I subscribe to quite a few mailing lists, for various topics and reasons. One in particular that catches my attention more often than others is the Security Basics list. This is one of several Security Focus lists that I subscribe to. If you're looking for some lists, this is a good place to start.

One topic in particular that caught my eye today was a question titled simply: "Length vs Complexity." Being a fan of looking at the complexity of things, I decided to see what this was all about.

> Users hear constantly that they should add complexity to their passwords, but from the math of it doesn't length beat complexity (assuming they don't just choose a long word)? This is not to suggest they should not use special characters, but simply that something like Security.Basics.List would provide better security than D*3ft!7z. Is that correct?

Responses ranged from people asserting that increasing the length was better than increasing the keyspace (number of possible characters) to other people saying just the opposite.

The main criticism of longer, easy-to-remember passwords (multiple words instead of random characters) was that they are still subject to dictionary attacks. Granted, a dictionary attack is harder to pull off when the password is more than one word, since you have to try more combinations or have some foreknowledge of how the password is structured. Those arguing that this approach was not better pointed out that a true brute-force attack would most likely not be the first thing an attacker tries.

Conversely, people saying that the "D*3ft!7z" example was weaker used the argument that it had fewer bits than a longer password with the same keyspace.
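To make that argument concrete, here is a minimal sketch of the naive calculation, assuming every character is drawn uniformly and independently from the 95 printable ASCII characters. The class and method names are hypothetical, chosen for illustration; this is not my original script.

```java
// A minimal sketch of the "bits" argument. It assumes every character is drawn
// uniformly and independently from a keyspace of the given size.
public class NaiveBitStrength {

    // Bits of entropy = length * log2(keyspace); only valid under the
    // uniform, independent-character assumption.
    static double bits(int length, int keyspace) {
        return length * (Math.log(keyspace) / Math.log(2));
    }

    public static void main(String[] args) {
        final int printableAscii = 95; // assumed keyspace: printable ASCII, including space
        String[] examples = {"Security.Basics.List", "D*3ft!7z"};
        for (String pw : examples) {
            System.out.printf("%-22s %3d chars  %.1f bits%n",
                    pw, pw.length(), bits(pw.length(), printableAscii));
        }
    }
}
```

Under that assumption the 20-character passphrase scores roughly 131 bits against about 53 for "D*3ft!7z", which is exactly the kind of number the length camp was pointing to.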

I fall in line with the first group. The problem with using bit strength as your metric for password security is that assigning a password a certain number of bits of entropy assumes that each character was generated randomly, independently of the other characters. Without that assumption, you cannot say that a longer password always has more bits of entropy than a shorter one. Entropy describes how unpredictable the process that produced a password is, not how long the resulting string happens to be.
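A small sketch shows how much the answer depends on the model you assume. The 100,000-word list and the idea that an attacker knows the Word.Word.Word pattern are hypothetical, picked only for illustration, and the word model further assumes each word is chosen uniformly at random from that list.

```java
// A minimal sketch contrasting two models of the same password. The wordlist
// size and the attacker's knowledge of the "Word.Word.Word" pattern are
// hypothetical assumptions for illustration only.
public class ModelDependentEntropy {

    static double log2(double x) {
        return Math.log(x) / Math.log(2);
    }

    // Character model: each of `length` characters chosen independently from `keyspace`.
    static double characterModelBits(int length, int keyspace) {
        return length * log2(keyspace);
    }

    // Word model: each of `words` words chosen independently and uniformly
    // from a list of `listSize` entries.
    static double wordModelBits(int words, int listSize) {
        return words * log2(listSize);
    }

    public static void main(String[] args) {
        String passphrase = "Security.Basics.List";
        int hypotheticalWordlist = 100_000; // assumed attacker wordlist size
        System.out.printf("%s as characters: %.1f bits%n",
                passphrase, characterModelBits(passphrase.length(), 95));
        System.out.printf("%s as 3 words:    %.1f bits%n",
                passphrase, wordModelBits(3, hypotheticalWordlist));
        System.out.printf("D*3ft!7z as characters: %.1f bits%n",
                characterModelBits(8, 95));
    }
}
```

Under those assumptions the passphrase drops from about 131 bits to just under 50, below the roughly 53 bits of the random 8-character string. Real users rarely pick words uniformly, so a real attacker would likely do even better.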

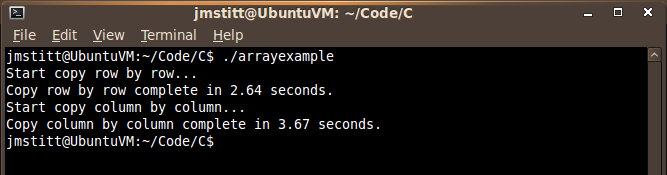

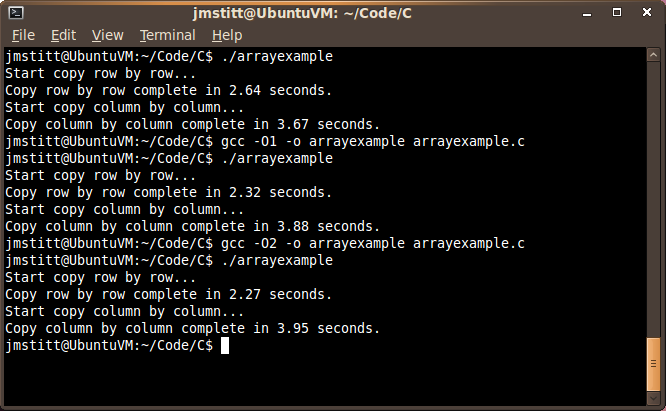

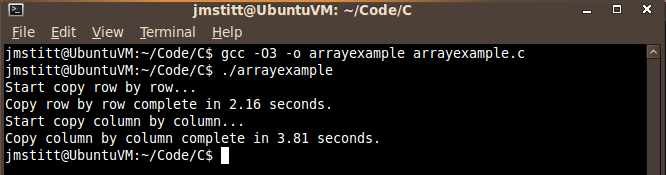

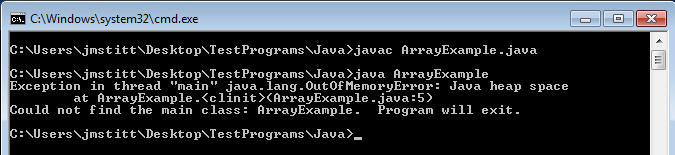

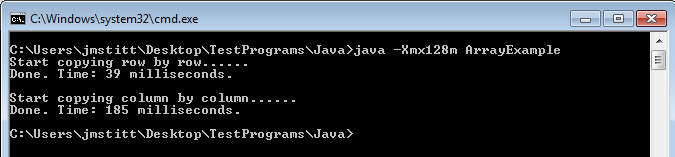

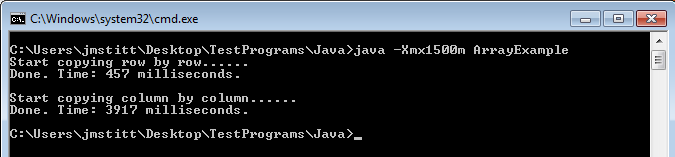

A few months ago, I wrote a short script in Java to easily do the math on password bit strength. The aforementioned problem is quickly evident when you run the program:

The program could be made more complex. Tests could be added to check whether all the character types for the given keyspace (numbers and letters; numbers, letters, and special characters; etc.) are actually used. In most cases, this would give a truer entropy value in the end. Notice I said "in most cases," as this still would not completely solve the problem.
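A minimal sketch of that kind of check, assuming printable ASCII split into four classes: 26 lowercase letters, 26 uppercase letters, 10 digits, and 33 other characters (including space). The keyspace is taken to be only the classes that actually appear in the password. Class and method names are hypothetical.

```java
// A minimal sketch: estimate the keyspace from the character classes that
// actually appear. Class sizes assume printable ASCII (26 + 26 + 10 + 33 = 95).
public class EffectiveKeyspace {

    static int effectiveKeyspace(String pw) {
        boolean lower = false, upper = false, digit = false, other = false;
        for (char c : pw.toCharArray()) {
            if (c >= 'a' && c <= 'z') lower = true;
            else if (c >= 'A' && c <= 'Z') upper = true;
            else if (c >= '0' && c <= '9') digit = true;
            else other = true;
        }
        int pool = 0;
        if (lower) pool += 26;
        if (upper) pool += 26;
        if (digit) pool += 10;
        if (other) pool += 33; // punctuation, symbols, and space
        return pool;
    }

    // Still assumes uniform, independent characters within the detected pool.
    static double bits(String pw) {
        int pool = effectiveKeyspace(pw);
        return pool == 0 ? 0.0 : pw.length() * Math.log(pool) / Math.log(2);
    }

    public static void main(String[] args) {
        String[] examples = {"Security.Basics.List", "D*3ft!7z", "AAAA", "AFIS"};
        for (String pw : examples) {
            System.out.printf("%-22s pool=%2d  %.1f bits%n", pw, effectiveKeyspace(pw), bits(pw));
        }
    }
}
```

"AAAA" and "AFIS" both land on a 26-character pool and about 18.8 bits, so this check alone cannot tell them apart.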

For example, say we have two passwords, "AAAA" and "AFIS", used by two members of Example.com. Example.com only allows capital letters in passwords, disallowing numbers and special characters. A dictionary attack on these passwords may return no results, but when brute forcing them, it is plainly evident that AAAA is going to be found much faster than AFIS.

Both passwords technically have the same number of bits, and both technically use all the character types available, but because AFIS was generated randomly, it has more entropy than AAAA.
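As a rough illustration, assume a hypothetical attacker who enumerates every four-letter uppercase candidate in plain alphabetical order (AAAA, AAAB, ..., AAAZ, AABA, ...). A deliberately simple strategy like this lets us compute each password's position in the sequence directly by treating it as a base-26 number. The names below are hypothetical.

```java
// A minimal sketch: position of an uppercase-only password in a sequential,
// alphabetical brute-force enumeration of fixed-length candidates.
// The enumeration order is an assumption; real crackers use smarter orders.
public class BruteForceOrder {

    // Zero-based index: read the password as a base-26 number with A=0 ... Z=25.
    static long guessIndex(String pw) {
        long index = 0;
        for (char c : pw.toCharArray()) {
            if (c < 'A' || c > 'Z') {
                throw new IllegalArgumentException("uppercase A-Z only: " + pw);
            }
            index = index * 26 + (c - 'A');
        }
        return index;
    }

    public static void main(String[] args) {
        long total = 1;
        for (int i = 0; i < 4; i++) total *= 26; // 26^4 four-letter candidates
        for (String pw : new String[] {"AAAA", "AFIS"}) {
            System.out.printf("%s found on guess %d of %d%n", pw, guessIndex(pw) + 1, total);
        }
    }
}
```

AAAA is the very first guess, while AFIS is guess 3,607 of 456,976. Because AFIS happens to start with A, plain alphabetical order still reaches it fairly early; real crackers usually try low-effort patterns such as repeated characters before anything else, which pushes random-looking passwords further back.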

Testing for randomness is quite a bit more difficult, and I'm not sure you can ever prove that something is truly random. Still, by doing the best we can, we will end up with much stronger passwords.
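One crude heuristic, and only a heuristic, is the Shannon entropy of the character frequencies within the password itself. The sketch below (hypothetical names) computes it in bits per character. It catches obvious repetition, but it is not a real randomness test.

```java
import java.util.HashMap;
import java.util.Map;

// A minimal sketch: empirical Shannon entropy of the character frequencies in a
// single string, in bits per character. Not a statistical randomness test.
public class CharFrequencyEntropy {

    static double bitsPerChar(String s) {
        // Count how often each character occurs.
        Map<Character, Integer> counts = new HashMap<>();
        for (char c : s.toCharArray()) {
            counts.merge(c, 1, Integer::sum);
        }
        // H = -sum(p * log2 p) over the observed characters.
        double h = 0.0;
        for (int n : counts.values()) {
            double p = (double) n / s.length();
            h -= p * (Math.log(p) / Math.log(2));
        }
        return h;
    }

    public static void main(String[] args) {
        for (String pw : new String[] {"AAAA", "ABAB", "AFIS", "ABCD"}) {
            System.out.printf("%s: %.2f bits/char%n", pw, bitsPerChar(pw));
        }
    }
}
```

AAAA scores 0 and AFIS scores 2 bits per character, but so would any four distinct letters such as ABCD, so an obvious sequential pattern sails right through.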

Nowadays, with GPUs used for password cracking, rainbow tables, massive botnets, and other forms of computation available to those who would seek to crack people's passwords, it is nearly impossible to say a password really is "secure." All we can hope is that it is "secure enough." The password system is inherently broken, but it will be a long time, if it ever happens, before we see it completely replaced by another system that does not suffer from its own shortcomings.

You can find the thread from the mailing list here.